Continuing from the previous two installments, fiisual’s 2026 Outlook series now moves into Part 3. Readers who have not yet reviewed the earlier articles may wish to refer to them first.

2026 Outlook Series Part 1 – What’s Next for the Global Economy in the Age of AI

2026 Outlook Series Part 2 – Key Industry Focus: ASIC

Key Industry II: Thermal Management

Thermal management is another industry set to enter a phase of rapid growth in 2026. Driven by AI, chip performance is advancing rapidly and TDP keeps rising, which significantly increases the importance of cooling systems. As the high-power GB300 GPU enters mass production in 2026, AI servers will fully transition toward cooling architectures designed for much higher heat flux density. Liquid cooling will become mainstream, pushing key liquid-cooling components, such as cold plates, QDs (quick disconnects), manifolds, and CDUs (coolant distribution units), into a phase of clearly accelerating growth.

Thermal Design Power (TDP): the maximum amount of heat a processor or component generates under standard operating workloads. A cooling system must be able to dissipate at least this much heat to prevent overheating.

The Thermal Industry Reaches a Technological Inflection Point: Liquid Cooling Becomes the Mainstream Trend

Historically, the thermal industry has been centered on air-cooling modules for general-purpose servers and PCs—a field with relatively mature technology and demand. However, with the rapid expansion of AI applications and the widespread adoption of high-power chips and high-density racks, the importance of thermal systems has increased dramatically. Cooling has shifted from a supporting role to a critical pillar of AI infrastructure.

Ensuring stable operation of high-power chips under long-duration, high-load conditions—while improving overall data center energy efficiency within constrained power and space—has become the core challenge facing the thermal industry.

Accelerated Transition in Cooling Technologies: Liquid Cooling Becomes the Dominant Path

Cooling solutions can be broadly divided into air cooling and liquid cooling. Depending on the application, liquid cooling can be further divided into cold-plate, immersion, and two-phase cooling. As AI chips and data center compute density continue to rise and power consumption climbs higher, high-performance servers demand far greater cooling efficiency. Traditional air cooling is approaching its physical limits, prompting thermal solution providers to shift toward liquid cooling as the key technology supporting next-generation AI infrastructure.

Comparison of Air Cooling and Liquid Cooling

| | Air Cooling | Liquid Cooling |
|---|---|---|
| TDP | 250W–500W | 700W–5,000W |
| Cooling efficiency | Moderate; requires many fans | High; directly removes heat |
| PUE | Higher | Lower; 20%–40% savings |
| Noise | High | Low |
| Space requirements | Large; more airflow and larger modules as TDP rises | Low; compact modules enable higher rack density |
| Cost | Low | High |
| Applications | General servers, memory, networking, PCs | AI servers |
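PUE (power usage effectiveness) is the ratio of total facility power to the power delivered to IT equipment, so a value closer to 1.0 means less overhead for cooling and power distribution. The sketch below uses hypothetical numbers (a 1 MW IT load plus assumed cooling and overhead figures, none of them taken from this article) to show how a cut in cooling power feeds into PUE:

```python
def pue(it_kw: float, cooling_kw: float, other_overhead_kw: float) -> float:
    """Power Usage Effectiveness: total facility power divided by IT equipment power."""
    if it_kw <= 0:
        raise ValueError("IT load must be positive")
    return (it_kw + cooling_kw + other_overhead_kw) / it_kw


# Hypothetical 1 MW IT load; every overhead figure below is an illustrative assumption.
IT_KW = 1_000.0
OTHER_KW = 100.0           # assumed: lighting, power-conversion losses, etc.
AIR_COOLING_KW = 400.0     # assumed cooling power for an air-cooled hall
LIQUID_COOLING_KW = 280.0  # assumed: 30% less cooling power than the air-cooled case

pue_air = pue(IT_KW, AIR_COOLING_KW, OTHER_KW)
pue_liquid = pue(IT_KW, LIQUID_COOLING_KW, OTHER_KW)
cooling_savings = 1 - LIQUID_COOLING_KW / AIR_COOLING_KW

print(f"PUE air-cooled:      {pue_air:.2f}")          # 1.50
print(f"PUE liquid-cooled:   {pue_liquid:.2f}")       # 1.38
print(f"Cooling power saved: {cooling_savings:.0%}")  # 30%
```

In this illustrative case, a 30% cut in cooling power lowers PUE from 1.50 to 1.38, which trims total facility power by about 8%; a percentage saving on cooling does not translate one-for-one into a facility-level saving.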

Air Cooling Faces Performance Limits in the High-Power AI Era

Air cooling remains the most widely used cooling method for general-purpose servers and PCs, consisting mainly of heat sinks (fins, heat pipes, vapor chambers) combined with fan systems that remove heat via forced airflow. With mature technology, low cost, and ease of maintenance, air cooling has long been the core product line for Taiwanese vendors such as Auras (3324.TW), Asia Vital Components (3017.TW), and Sunonwealth Electric (2421.TW).

However, as AI GPU/ASIC power consumption rapidly climbs to 700W, 1,000W, and even toward 2,000–3,000W, the physical limitations of air cooling have become increasingly evident. Higher airflow requires faster fans, which raises noise and power consumption while delivering diminishing marginal gains in cooling. More critically, air cooling struggles to handle ultra-high heat flux density, leading to throttling under heavy workloads that hurts both performance and system reliability.

Liquid Cooling Becomes the Core Cooling Solution for AI Servers

Liquid cooling works by circulating coolant through microchannels in cold plates to directly remove heat from the source, with heat then discharged to external cooling equipment via CDUs or sidecars. Compared with air, liquid offers much higher specific heat capacity and thermal conductivity.
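A back-of-the-envelope heat balance makes the air-versus-liquid gap concrete. The sketch applies Q = ṁ·c_p·ΔT to a single ~1,000W accelerator, assuming plain water as the coolant, room-temperature air, and a 10 K coolant temperature rise. All of these are assumptions; production loops often use glycol mixtures with somewhat lower specific heat.

```python
# Approximate room-temperature properties (textbook values; assumptions for this sketch).
WATER_CP = 4180.0  # specific heat of water, J/(kg*K)
WATER_RHO = 997.0  # density of water, kg/m^3
AIR_CP = 1005.0    # specific heat of air, J/(kg*K)
AIR_RHO = 1.2      # density of air near 20 C at sea level, kg/m^3


def volumetric_flow_m3s(heat_w: float, cp: float, rho: float, delta_t_k: float) -> float:
    """Volumetric flow needed to carry heat_w watts with a delta_t_k temperature rise.

    From Q = m_dot * cp * dT  ->  m_dot = Q / (cp * dT), then V_dot = m_dot / rho.
    """
    mass_flow = heat_w / (cp * delta_t_k)  # kg/s
    return mass_flow / rho                 # m^3/s


HEAT_W = 1000.0  # one ~1,000 W accelerator
DELTA_T = 10.0   # assumed 10 K coolant temperature rise

water = volumetric_flow_m3s(HEAT_W, WATER_CP, WATER_RHO, DELTA_T)
air = volumetric_flow_m3s(HEAT_W, AIR_CP, AIR_RHO, DELTA_T)

print(f"Water: {water * 60_000:.2f} L/min")            # 1 m^3/s = 60,000 L/min -> ~1.44
print(f"Air:   {air * 2118.88:.0f} CFM")               # 1 m^3/s ~ 2,118.88 CFM -> ~176
print(f"Air needs ~{air / water:,.0f}x the volume")    # ~3,456x
```

Under these assumptions, roughly 1.4 L/min of water does the job of about 176 CFM of air, because water stores about 3,500 times more heat per unit volume.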

As a result, liquid cooling can support far higher heat flux densities than air cooling, handling TDPs from 700W up to 3,000–5,000W, making it the most critical cooling technology for the rapid growth of AI servers. As AI models grow larger, GPU density increases, and data centers pursue lower PUE, liquid cooling not only improves heat exchange efficiency and reduces energy consumption, but also enables greater compute density within the same power and space constraints.

That said, liquid cooling comes with higher system costs and integration complexity. It relies on multiple critical components—including cold plates, microchannels, quick disconnects, CDUs, and piping—and places higher demands on leak prevention, coolant purity management, and overall data center cooling architecture.

New Cooling Architecture: Liquid Cooling for Core Heat, Air Cooling as a Complement

As mainstream AI servers increasingly adopt liquid cooling, its importance continues to rise. However, even with liquid-cooled servers, components outside high-power AI accelerators—such as VRMs, memory, SSDs, NICs, and PCB hotspots—still rely on fans and air-cooling modules to dissipate residual heat.

As a result, air cooling will not disappear with the rise of liquid cooling. Instead, it will transition from the primary cooling solution to a supplementary role, handling lower-power heat sources. Conversely, core cooling for high-power AI GPUs and ASICs will be fully taken over by liquid cooling. Looking ahead, data centers will adopt a dual-track architecture in which liquid cooling manages primary heat sources while air cooling handles peripheral components—forming the fundamental cooling model of the AI era.

Strong Growth Ahead for Liquid Cooling in 2026: Dual Drivers from GB300 and ASIC

GB300 Volume Ramp as the Core Growth Driver

Due to their higher power consumption and extreme heat density, GPUs entered the liquid-cooling era earlier than ASICs. As NVIDIA enters large-scale GB300 production starting in 2026, AI server cooling architectures will transition from the hybrid approach used in the GB200 era to a fully direct-to-chip liquid cooling design, driving rapid expansion across the liquid-cooling industry.

Rising TDP Drives Comprehensive Cooling Upgrades and Higher Liquid-Cooling Content Value

With improved cooling efficiency and the addition of CPX (Cooling Performance Extension), GB300 GPU TDP has increased to nearly 2,000W—well beyond what air cooling can handle. Liquid cooling, once optional for high-end systems, becomes standard in the GB300 generation. Under this upgraded architecture, the usage and value of liquid-cooling components—including cold plates, manifolds, QDs, CDUs/sidecars, and piping—rise across the board.

For example, a GB200 rack typically requires around 96–108 cold plates and roughly 360 QDs. With higher flow and heat density requirements, GB300 increases both cold plate and QD counts, with a significant rise in tray-level cold plates. As a result, the liquid-cooling BOM value per rack increases by approximately 10%–30% versus GB200. Overall, liquid-cooling content value for AI training racks has risen from several hundred dollars in traditional servers to roughly USD 20,000–30,000 per rack, becoming a major growth driver for the thermal supply chain in 2026.

| | GB200 NVL72 | GB300 NVL72 | Change |
|---|---|---|---|
| Cold plates (units) | 99 | 117 | +18% |
| QDs (units) | 360 | 576 | +60% |
| Cold plate + QD value (per rack) | $23,490 | $30,870 | +31% |

Note: Comparison of liquid-cooling component content value for GB200 and GB300 (usage varies by CSP customization). Source: KGI Securities.
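The Change column can be recomputed directly from the counts and values KGI reports. The short check below uses only the figures in the table; the dictionary keys are hypothetical labels chosen for readability.

```python
# Per-rack figures from the KGI table (GB200 NVL72 vs GB300 NVL72).
gb200 = {"cold_plates": 99, "qds": 360, "cold_plate_qd_value_usd": 23_490}
gb300 = {"cold_plates": 117, "qds": 576, "cold_plate_qd_value_usd": 30_870}


def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


changes = {key: pct_change(gb200[key], gb300[key]) for key in gb200}
for key, pct in changes.items():
    print(f"{key}: {pct:+.0f}%")
# prints +18% for cold plates, +60% for QDs, and +31% for cold plate + QD value
```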

GB300 Mass Production Drives Parallel Growth in Liquid-Cooling Component Shipments

In 2025, Blackwell platform shipments remain dominated by GB200 NVL72, with GB300 still at the engineering-sample stage and shipping only in limited volumes. In 2026, GB300 formally enters mass production and ramps in volume, becoming the core platform for next-generation high-power, high-heat-density AI training servers and accelerating the industry-wide shift toward liquid cooling.

Estimated shipments indicate that combined GB200 and GB300 shipments reached approximately 23,000–25,000 racks in 2025. With GB300 ramping sharply in 2026, total NVL72 shipments are expected to rise to 55,000–60,000 racks, representing roughly 130% YoY growth. Notably, nearly all incremental volume in 2026 is driven by GB300, whose near-2,000W TDP and CPX architecture significantly upgrade liquid-cooling configurations, lifting per-rack liquid-cooling BOM value by an additional 10%–20% versus GB200.
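The growth implied by these shipment estimates can be bounded by pairing the ends of the two ranges. The sketch below uses only the rack figures quoted above; the roughly 130% estimate falls inside the implied band.

```python
# Rack shipment estimates quoted in the text (GB200 + GB300 NVL72 racks).
racks_2025 = (23_000, 25_000)
racks_2026 = (55_000, 60_000)


def yoy_growth(old: float, new: float) -> float:
    """Year-over-year growth as a percentage."""
    return (new / old - 1) * 100


low = yoy_growth(racks_2025[1], racks_2026[0])   # most conservative pairing
high = yoy_growth(racks_2025[0], racks_2026[1])  # most aggressive pairing
mid = yoy_growth(sum(racks_2025) / 2, sum(racks_2026) / 2)

print(f"YoY growth range: {low:.0f}% to {high:.0f}% (midpoint {mid:.0f}%)")
# YoY growth range: 120% to 161% (midpoint 140%)
```

Depending on which ends of the two ranges are paired, growth spans roughly 120% to 161%, so every combination implies that rack shipments more than double.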

Overall, the GB300 ramp marks the official entry of AI servers into a liquid-cooling-dominant era. With higher specifications and usage of key components such as cold plates, QDs, manifolds, and CDUs, 2026 will see the thermal management supply chain enter a phase of accelerated growth.

Rapid Rise in ASIC Liquid-Cooling Adoption from 2026

While AI ASICs are designed for high efficiency and lower power consumption, the continued expansion of large-scale inference models has pushed heat density close to the limits of air cooling. By 2026, ASIC TDP is expected to approach the 1,000W threshold, beyond which air cooling becomes increasingly ineffective.

Even though no single ASIC exceeded 1,000W in 2025, leading CSPs have already completed liquid-cooling infrastructure deployment in preparation for the next generation of high-power ASICs.

AWS, an early adopter, illustrates the shift. Trainium 2 still uses vapor chamber (VC) air cooling, while the improved Trainium 2.5 introduced in 2025 has begun limited validation of liquid-cooled racks. Trainium 3, scheduled for mass production in 2Q26, will formally adopt liquid cooling, rapidly increasing penetration. Although Trainium 3 TDP may not universally exceed 1,000W, high-density deployments already require liquid cooling in certain racks, highlighting the structural limits of air cooling.

Similarly, Google TPU v6 and Meta MTIA 2 will launch liquid-cooled versions, further confirming the irreversible trend toward ASIC liquid cooling.

At the system level, CSP-designed ASIC servers often adopt rack-scale architectures similar to GPU servers, significantly increasing cold plate and QD usage across compute and switching layers. According to both Morgan Stanley and KGI, once ASICs adopt liquid cooling in 2026, their liquid-cooling content value will approach—or even rival—that of GPU servers, making ASIC cooling the second structural growth driver for the thermal supply chain after GB300.

Overall, 2026 represents a critical inflection point for ASIC liquid-cooling adoption. With rising TDP, completed liquid-cooling infrastructure, and next-generation ASICs from AWS, Google, and Meta fully supporting liquid cooling, demand for liquid-cooling components will expand rapidly—driving strong growth across cold plates, QDs, manifolds, and CDUs under a dual-engine growth model led by GB300 and ASICs.

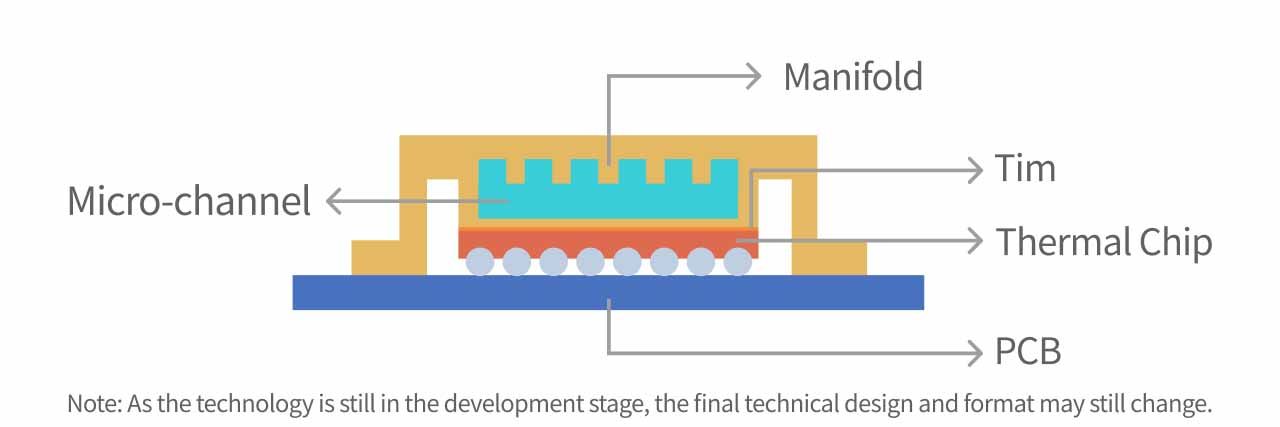

Micro-channel Lid (MCL): Not Yet Mature, Expected Adoption in 2027

As model sizes continue to grow and AI training and inference chip TDP rises, cooling solutions relying solely on external cold plates are nearing their technical limits. The industry is therefore developing more advanced technologies, with Micro-channel Lid (MCL) emerging as the most promising next-generation approach.

MCL represents the most advanced form of chip-level liquid cooling. Its core concept involves fabricating microchannels directly into the chip package lid, allowing coolant to flow above the die for direct convective cooling. Compared with traditional cold plates or microchannel cold plates (MCCP), MCL offers higher heat flux handling capability, lower thermal resistance, and a significantly shorter heat transfer path—enabling support for future AI GPUs and ASICs with power levels of 1,500–2,000W.

Compared with conventional liquid cold plates, MCL delivers superior efficiency, handling heat flux of 200–400 W/cm², and works in tandem with system-level liquid cooling to reduce overall thermal resistance in high-density rack architectures.
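Heat flux is power divided by the area it passes through, which is why rising TDP on a roughly fixed die size pushes flux upward. The sketch assumes a hypothetical 800 mm² of heat-generating silicon (just under the ~858 mm² lithography reticle limit) and computes the average flux at several power levels mentioned in this section.

```python
def heat_flux_w_per_cm2(power_w: float, die_area_mm2: float) -> float:
    """Average heat flux over the die: power divided by area (mm^2 converted to cm^2)."""
    return power_w / (die_area_mm2 / 100.0)


DIE_AREA_MM2 = 800.0  # hypothetical silicon area, an assumption for illustration only

for power in (1_000, 1_500, 2_000):
    flux = heat_flux_w_per_cm2(power, DIE_AREA_MM2)
    print(f"{power:>5} W over {DIE_AREA_MM2:.0f} mm^2 -> {flux:.1f} W/cm^2")
# 125.0, 187.5 and 250.0 W/cm^2 respectively
```

At 2,000W the average already reaches 250 W/cm², inside the 200–400 W/cm² band cited for MCL. Real dies also have local hotspots above the average, and multi-die packages spread power over more area, so this is only a first-order estimate.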

However, despite a clear technological roadmap, MCL faces much higher barriers to mass production. It requires advanced microfabrication, ultra-clean processes, close collaboration with packaging houses, and the integration of liquid channels without compromising package reliability. Currently, MCL remains in the validation and prototype stage and is not yet ready for large-scale AI accelerator production. CSP and GPU vendor roadmaps also indicate that cold plates and MCCP will remain the mainstream solutions through 2025–2026.

Given constraints around technology maturity, process integration, and capacity build-out, market consensus expects MCL to begin limited adoption no earlier than 2027, starting with next-generation GPUs and high-power ASICs. At that point, chip-level liquid cooling will complement MCCP to support 2,000W-class AI accelerators.

Taiwan Focus Stocks

2026 will be a pivotal growth year for the thermal industry. The supply chain can be broadly divided into upstream IC/system vendors, midstream air- and liquid-cooling components and system integration, and downstream rack assembly. Taiwanese companies are primarily concentrated in the mid-to-downstream segments, supplying key liquid-cooling components—such as cold plates, QDs, manifolds, and CDUs—as well as rack-level integration services.

Looking ahead, industry growth in 2026 will be driven by two major factors:

- A significant increase in liquid-cooling content value per rack

- A doubling of liquid-cooled rack shipments

Because liquid cooling must meet extremely high reliability requirements, and given historical leakage risks, CSPs tend to deepen cooperation with their existing suppliers. As a result, Taiwanese companies with NVIDIA/CSP certifications and proven deployment experience are expected to be the most visible and structurally advantaged beneficiaries in 2026.

Asia Vital Components (3017.TW)

Asia Vital Components is a leading Taiwanese thermal solution provider, spanning air cooling, liquid cooling, and full rack-level systems. Its product portfolio covers DC/AC fans, cold plates, MCCP, manifolds, and integrated liquid-cooling solutions. Its competitive strengths lie in:

- Strong vertical integration and extensive mass-production experience, enabling execution of complex liquid-cooling modules

- Long-term partnerships with NVIDIA, AMD, and major CSPs, with deep involvement in GB200/GB300 GPU liquid-cooling modules and multiple AI ASIC projects

Looking into 2026, Asia Vital Components is positioned for growth driven by:

- Large-scale GB300 ramp from 2026, significantly increasing liquid-cooling content value versus GB200

- Securing multiple CSP ASIC orders beginning in 2026, boosting demand for cold plates, manifolds, and O-Rack liquid-cooling systems

- New capacity in Vietnam coming online through 2025–2026, enabling higher liquid-cooling order intake

Overall, supported by its comprehensive product portfolio, mature production capabilities, global capacity footprint, and deep Tier-1 GPU/CSP relationships, Asia Vital Components is well positioned to deliver strong growth under the dual drivers of GB300 and ASICs, reinforcing its leadership role in Taiwan’s liquid-cooling supply chain.

Fositek (6805.TW)

Fositek is a Taiwanese precision hinge supplier focused on consumer electronics, with foldable smartphone hinges as its core revenue driver. Through long-term partnerships with Chinese and Korean foldable phone brands, the company has built strong competitive advantages in precision machining, durability design, and high-reliability hinge technology.

Beyond its core hinge business, Fositek has actively expanded into AI server liquid cooling and is among the few global suppliers with mass-production capability for high-reliability QDs. Its products are widely used in data centers, AI accelerators, and rack-level liquid-cooling systems, and have passed certification from multiple Tier-1 CSPs. Its key advantages include:

- Comprehensive capabilities in precision machining, pressure testing, and hermetic sealing

- High-pressure, high-flow, high-reliability QDs with faster specification upgrades than peers

- Close collaboration with major liquid-cooling integrators, capturing rising AI liquid-cooling penetration

Looking ahead to 2026, Fositek is set to benefit from three structural growth drivers:

- Recovery in foldable smartphone shipments, more new models, and hinge specification upgrades

- Increased QD usage per rack driven by NVIDIA GB300 volume ramp, lifting ASPs

- Rapid adoption of liquid cooling for CSP-developed ASICs, significantly expanding QD demand at the system level

Overall, with leadership in precision hinges and strong positioning in AI liquid-cooling components, Fositek stands to enter an accelerated growth phase in 2026, emerging as a representative high-growth player across Taiwan’s liquid-cooling and precision mechanical components sectors.

Continue reading: 2026 Outlook Series Part 4 – Key Industries: Memory

Related article: Taiwan Industry 101: Thermal Management Industry